Can you use Gen-AI to make a drama short?

13 Mar 25

Ever since creating a Gen-AI walking, talking avatar for a KPMG ad last Christmas, I have to admit I’ve become quite obsessed with the idea of making an AI drama short. By that I mean a film with proper dialogue between characters and a sense of human emotion and story. What inspires me is the notion of bringing to life ideas that have been destined to languish in Final Draft for lack of time and budget. In spite of all our fears around AI’s potential to upend filmmaking as we know and love it, the prospect of being able to just make a film without having to raise huge amounts of money first… it’s quite compelling!

Recently there was a great deal of chat around the Tilt studio about the brilliant ‘The Pisanos’ Gen-AI Porsche spec ad. Like this one, many of the stand-out projects we’ve seen so far tend to lean into the strengths of Gen-AI – the ease of producing striking, high-production-value visuals – without necessarily attempting the bigger challenge of realistic spoken dialogue. Of course there are exceptions, such as the recent ‘The Ghost’s Apprentice’ Star Wars fan film made with Google DeepMind’s Veo 2, but there’s a largely empty space here waiting to be explored.

The reason? The ‘uncanny valley’ phenomenon, I would presume. Coined in the 70s, the term describes a dip in a graph: humans connect more and more with increasingly humanoid-looking characters (C-3PO for example), but only up to a point, and then the curve flips. When people encounter a near-but-not-quite-perfectly realistic human, this can induce a feeling of unease or revulsion – the uncanny valley.

Look at how cgi Princess Leia was only given seven seconds of screen time and one word to say in Rogue One, or how audiences were divided over The Polar Express. The complexity of recreating human expression should not be underestimated. Eyes – the windows to our soul – are a big problem. We’re naturally attuned to human eyes. They convey emotion, intention, and life itself, so that even subtle imperfections can be easily detected, creating a sense of something being “off.”

But at Tilt we’re never ones to shy away from giving things a go, and so, understanding these potential limitations, I set about trying to set free one of my pipe-dream films.

Five days; Two scenes; One creative. The challenge was set.

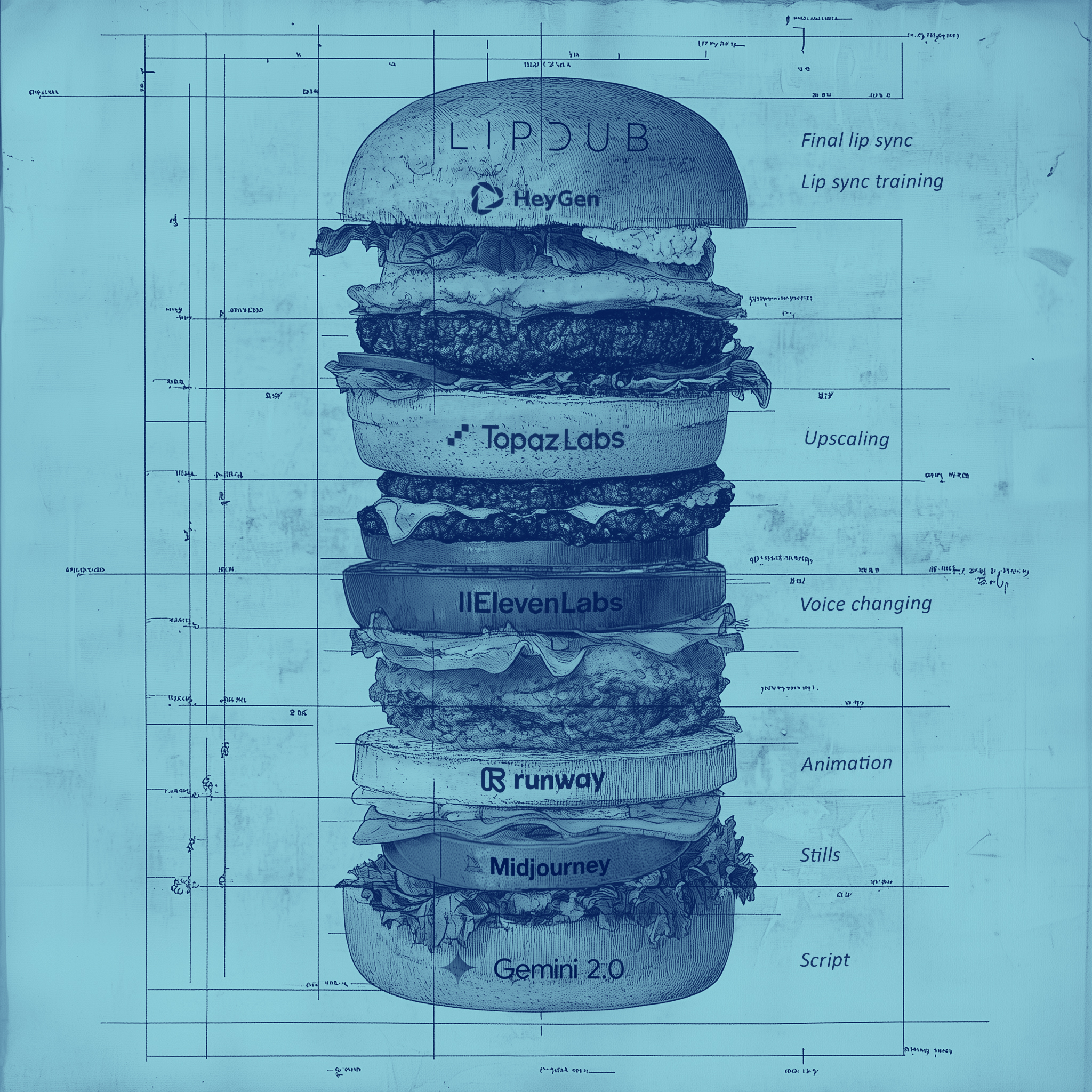

What’s in the creative stack?

I’ve written about ‘the creative AI stack’ in more detail previously, so I won’t linger too long on it. In a nutshell though, think of the stack like a traditional film production pipeline – script, storyboard, shoot, edit, cgi, grade etc. – but using a variety of interdependent AI production specialists rather than human ones. This is how it looked for this project:

The process

Script-wise, this was my chance at last to resurrect an old screenplay. The one I chose was ‘In Blood’, about an estranged father and daughter who are thrown together – rebuilding their relationship by unpicking a family secret from the past (skip to the end if you want to take a look at the results). I’d already pieced together all the beats and character development, and now I used Google’s Gemini to help flesh out the actual dialogue. As expected, this needed my human brain to finesse, but Gemini served well to quickly sequence the scene in various ways to see which worked the best, saving several hours of head scratching. I liked how Gemini formatted it all correctly for Final Draft.

Midjourney still seems to be the best place to go to generate highly polished storyboard stills to use as start frames for the Gen-AI video clips. I started by designing Midjourney character references (--cref) for consistency of cast, curating shots of every key character from multiple angles. The best of these were used to inform the generation of the storyboard stills.
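For anyone wanting to keep their storyboard prompts consistent, here is a minimal sketch of how the character reference parameter (written `--cref` in a Midjourney prompt) might be attached to each shot description. It only builds the text you would paste into Midjourney yourself; the helper name `build_prompt`, the cast labels and the example.com image URLs are all hypothetical placeholders, not the ones used on this project.

```python
# A sketch only: assembles prompt text, it does not talk to Midjourney.
# `build_prompt`, the cast labels and the URLs below are hypothetical.

def build_prompt(description: str, cref_url: str, aspect_ratio: str = "16:9") -> str:
    """Append a character reference and aspect ratio to a shot description."""
    description = description.strip()
    if not description:
        raise ValueError("shot description must not be empty")
    if not cref_url:
        raise ValueError("a character reference image URL is required")
    return f"{description} --cref {cref_url} --ar {aspect_ratio}"


# One reference image per key character (placeholder URLs).
CAST = {
    "daughter": "https://example.com/daughter-reference.png",
    "father": "https://example.com/father-reference.png",
}

if __name__ == "__main__":
    shot = "cinematic close-up, woman in the passenger seat of a car, overcast light"
    print(build_prompt(shot, CAST["daughter"]))
```

Keeping one reference URL per character in a single place means every shot of that character is generated against the same image, which is the whole point of the character reference.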

When selecting the best storyboard shots to be animated, you really need to consider the small details. A rogue collar, hair parting, or button configuration can really scupper you further down the line, and you don’t want to be manually keyframing these details in post. For example, I spent time in Photoshop fixing clothing variations, and adding seatbelts to the car scene. In spite of describing each seatbelt in minute detail, these were a complete mystery to Midjourney’s algorithms deep in the black box – seatbelts over shoulders, around necks, or even as fashion adornments, but never keeping someone safe in their seat.

In fact, I rarely achieved the perfect shot straight out of the box, instead combining elements from multiple images and making good use of Photoshop’s AI Content-Aware Fill to achieve the vision. Another hack – because Midjourney character references only apply to a single person in a shot – was to generate the car two-shot using one --cref, and then use the Vary (Region) tool to change one side of the image with a different --cref prompt.

In terms of generative video, we’ve been seeing tantalising examples of the capabilities of Google DeepMind’s Veo 2 (the fake Porsche ad for example, or the beautiful Kitsune animated short) but it’s early days and the technology was not available in the UK at this time. I made the decision to animate solely with Runway’s Gen-3 for a consistent look, which I guess in some ways is like choosing a camera and lens combination. As expected, you need to generate a lot of content to find the useable gems. Trying to control the unruly whims of Gen-3 can feel like attempting to direct a circus troupe on acid!

When it came to the dialogue, I generated many clips of the characters talking, gesturing, listening, reacting and responding. In the edit, I picked the clips that best conveyed the movement and emotion of each line and aligned them with the voiceover.

The biggest issue I faced was trying to achieve realism with the character who is driving. I realised quickly that a car scene was probably a bad choice. For some reason, the car would often be reversing – not ideal on the motorway – and while a passenger can look anywhere they want to while talking, the driver must remain mostly looking at the road. The complexity of this – like the seatbelts in Midjourney – was something that Gen-3 found hard to achieve without the renders portraying either a seriously dangerous driver, or a very wooden one. I would say this is my biggest criticism of the final result.

My favourite way to shoot car scenes in real life is by using a car mount to view your characters by looking in through a window, with the reflected world drifting by out of focus in the glass – used to great effect in the film Locke by Steven Knight. As you might guess, Gen-3 struggled with this complexity. It could mimic very well the flickering shadows of intermittently passing trees or objects that temporarily eclipse the bright reflections, but the bright reflections themselves would not be moving at all. In the end, I motion-tracked the car movements in After Effects, and generated ‘reflection POV’ clips with Gen-3 to overlay on a semi-transparent layer – offsetting the keyframes a little and scaling up slightly so there was some parallaxing of the foreground reflections against the more distant scene objects.
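The reflection trick is easier to picture with numbers. Below is a rough sketch of the idea rather than what After Effects actually does under the hood: take the tracked keyframes, nudge them later in time, and exaggerate the movement slightly so the reflection layer drifts a little differently from the scene behind it. I’m assuming here that ‘scaling up’ means scaling the motion, and the names `Keyframe`, `parallax_keyframes` and `blend`, along with the default offset, scale and opacity values, are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Keyframe:
    time: float  # seconds
    x: float     # horizontal position in pixels
    y: float     # vertical position in pixels


def parallax_keyframes(tracked, time_offset=0.08, scale=1.15):
    """Derive reflection-layer keyframes from tracked car motion.

    Each keyframe is shifted later by `time_offset` seconds, and its
    displacement from the first keyframe is multiplied by `scale`, so the
    overlay moves slightly more than the scene it sits on top of.
    """
    if not tracked:
        return []
    origin = tracked[0]
    return [
        Keyframe(
            time=k.time + time_offset,
            x=origin.x + (k.x - origin.x) * scale,
            y=origin.y + (k.y - origin.y) * scale,
        )
        for k in tracked
    ]


def blend(base, overlay, opacity=0.3):
    """Mix one overlay pixel value over a base value at the given opacity."""
    return base * (1.0 - opacity) + overlay * opacity


if __name__ == "__main__":
    # Hypothetical track: the car drifts 12 px to the right over one second.
    track = [Keyframe(0.0, 960.0, 540.0), Keyframe(0.5, 966.0, 541.0), Keyframe(1.0, 972.0, 540.0)]
    for keyframe in parallax_keyframes(track):
        print(keyframe)
```

The small mismatch in timing and travel between the two layers is what sells the depth: the reflections read as sitting on the glass, in front of the characters, rather than being painted onto them.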

Sound

In reality, just like a Pixar film, you’d want to use real actors’ voices, but the challenge was to make this film on my own. Instead of text-to-speech, I recorded scratch audio of myself acting out all the character parts, and used ElevenLabs to change my voice to that of each character. The process seemed to work very well apart from my unfortunate lack of ability to act, but I guess you could bring in a single versatile actor to deliver all of the lines.

Similarly to the AI film we created for KPMG, I used the combination of HeyGen and LipDub to respectively create the training clips and end result lip sync. You can read about the process in my previous article. For some of the more active deliveries for the character Maia, I ensured we had many different HeyGen training clips from that particular scene, just in case LipDub struggled to figure things out.

The last stage of the process was all the finesse that helps bring realism and a cinematic aesthetic – cgi clean-up, music and sound design, letterboxing, colour grade, adding film grain etc. – so it all seemed more polished and less digital. I loved that this really felt like creative human endeavour working hand-in-hand with AI, as opposed to the machine doing everything for me.

Conclusion

I very much enjoyed the challenge of bringing a dramatic story to life through AI, and feel very inspired to continue my experiments – and perhaps finish making In Blood. Yes, I created a thing in five days, but not without a great deal of human effort and experience in parallel. Did it work? Well… kind of. You can see we’re definitely not 100% there yet. There’s no getting away from that AI look – although I’m sure this will improve with every updated model. And if not uncanny valley, there’s a distinct glassy-eyed stiffness to the performances, and a lack of the subtle nuance that real actors bring. Again, I’m sure this will get better with time. Considering this was made by one person in a very short time, armed only with a bunch of Gen-AI credits, to me it’s mind-blowing.

So are we about to see the end of filming with crews and cameras? I can’t see it somehow. Personally, this project has confirmed my belief that Gen-AI will radically change many aspects of our industry, and is already doing so. But not all of it perhaps. If you can make a great Mini ad or Pixar film with Gen-AI, or create cgi in a new way, then why not? These are the places of suspended reality, and I don’t think audiences will really care whether or not the production process is human or machine, if they can’t tell the difference. But when it comes to lifelike dramatic human storytelling, I think that actual people, captured through glass to a silicon sensor, will most likely prevail – used of course in combination with AI technologies.

To my mind, we seem to be moving into an era where many of us, like it or not, must increasingly become specialist directors of AI, as opposed to being production specialists. As both a long-term director and generalist, to me this feels like an exciting evolution of the craft that, used in the right way, can only further our storytelling abilities, but I understand this can be a painful notion to many experienced creatives. My fear is that as Gen-AI production becomes the norm, we lose our ability to understand what is good and bad – letting the GPUs do that for us. Then we really will have thrown the humans out with the bathwater.

Watch ‘In Blood’ below:

Thanks for reading. If you’d like to chat about how creative AI can help you, then please get in touch.
